+

+ | Face Image |

+ Video |

+ Description |

+

+  |

+  |

+ The video, in a beautifully crafted animated style, features a confident woman riding a horse through a lush forest clearing. Her expression is focused yet serene as she adjusts her wide-brimmed hat with a practiced hand. She wears a flowy bohemian dress, which moves gracefully with the rhythm of the horse, the fabric flowing fluidly in the animated motion. The dappled sunlight filters through the trees, casting soft, painterly patterns on the forest floor. Her posture is poised, showing both control and elegance as she guides the horse with ease. The animation's gentle, fluid style adds a dreamlike quality to the scene, with the woman’s calm demeanor and the peaceful surroundings evoking a sense of freedom and harmony. |

+

+

+  |

+  |

+ The video, in a captivating animated style, shows a woman standing in the center of a snowy forest, her eyes narrowed in concentration as she extends her hand forward. She is dressed in a deep blue cloak, her breath visible in the cold air, which is rendered with soft, ethereal strokes. A faint smile plays on her lips as she summons a wisp of ice magic, watching with focus as the surrounding trees and ground begin to shimmer and freeze, covered in delicate ice crystals. The animation’s fluid motion brings the magic to life, with the frost spreading outward in intricate, sparkling patterns. The environment is painted with soft, watercolor-like hues, enhancing the magical, dreamlike atmosphere. The overall mood is serene yet powerful, with the quiet winter air amplifying the delicate beauty of the frozen scene. |

+

+

+  |

+  |

+ The animation features a whimsical portrait of a balloon seller standing in a gentle breeze, captured with soft, hazy brushstrokes that evoke the feel of a serene spring day. His face is framed by a gentle smile, his eyes squinting slightly against the sun, while a few wisps of hair flutter in the wind. He is dressed in a light, pastel-colored shirt, and the balloons around him sway with the wind, adding a sense of playfulness to the scene. The background blurs softly, with hints of a vibrant market or park, enhancing the light-hearted, yet tender mood of the moment. |

+

+

+  |

+  |

+ The video captures a boy walking along a city street, filmed in black and white on a classic 35mm camera. His expression is thoughtful, his brow slightly furrowed as if he's lost in contemplation. The film grain adds a textured, timeless quality to the image, evoking a sense of nostalgia. Around him, the cityscape is filled with vintage buildings, cobblestone sidewalks, and softly blurred figures passing by, their outlines faint and indistinct. Streetlights cast a gentle glow, while shadows play across the boy's path, adding depth to the scene. The lighting highlights the boy's subtle smile, hinting at a fleeting moment of curiosity. The overall cinematic atmosphere, complete with classic film still aesthetics and dramatic contrasts, gives the scene an evocative and introspective feel. |

+

+

+  |

+  |

+ The video features a baby wearing a bright superhero cape, standing confidently with arms raised in a powerful pose. The baby has a determined look on their face, with eyes wide and lips pursed in concentration, as if ready to take on a challenge. The setting appears playful, with colorful toys scattered around and a soft rug underfoot, while sunlight streams through a nearby window, highlighting the fluttering cape and adding to the impression of heroism. The overall atmosphere is lighthearted and fun, with the baby's expressions capturing a mix of innocence and an adorable attempt at bravery, as if truly ready to save the day. |

+

+

+

+## Resources

+

+通过以下资源了解有关 ConsisID 的更多信息:

+

+- 一段 [视频](https://www.youtube.com/watch?v=PhlgC-bI5SQ) 演示了 ConsisID 的主要功能;

+- 有关更多详细信息,请参阅研究论文 [Identity-Preserving Text-to-Video Generation by Frequency Decomposition](https://hf.co/papers/2411.17440)。

diff --git a/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/using-diffusers/schedulers.md b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/using-diffusers/schedulers.md

new file mode 100644

index 0000000000000000000000000000000000000000..8032c1a9890469c5b05d0e7733a64abab4a53551

--- /dev/null

+++ b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/using-diffusers/schedulers.md

@@ -0,0 +1,256 @@

+

+

+# 加载调度器与模型

+

+[[open-in-colab]]

+

+Diffusion管道是由可互换的调度器(schedulers)和模型(models)组成的集合,可通过混合搭配来定制特定用例的流程。调度器封装了整个去噪过程(如去噪步数和寻找去噪样本的算法),其本身不包含可训练参数,因此内存占用极低。模型则主要负责从含噪输入到较纯净样本的前向传播过程。

+

+本指南将展示如何加载调度器和模型来自定义流程。我们将全程使用[stable-diffusion-v1-5/stable-diffusion-v1-5](https://hf.co/stable-diffusion-v1-5/stable-diffusion-v1-5)检查点,首先加载基础管道:

+

+```python

+import torch

+from diffusers import DiffusionPipeline

+

+pipeline = DiffusionPipeline.from_pretrained(

+ "stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True

+).to("cuda")

+```

+

+通过`pipeline.scheduler`属性可查看当前管道使用的调度器:

+

+```python

+pipeline.scheduler

+PNDMScheduler {

+ "_class_name": "PNDMScheduler",

+ "_diffusers_version": "0.21.4",

+ "beta_end": 0.012,

+ "beta_schedule": "scaled_linear",

+ "beta_start": 0.00085,

+ "clip_sample": false,

+ "num_train_timesteps": 1000,

+ "set_alpha_to_one": false,

+ "skip_prk_steps": true,

+ "steps_offset": 1,

+ "timestep_spacing": "leading",

+ "trained_betas": null

+}

+```

+

+## 加载调度器

+

+调度器通过配置文件定义,同一配置文件可被多种调度器共享。使用[`SchedulerMixin.from_pretrained`]方法加载时,需指定`subfolder`参数以定位配置文件在仓库中的正确子目录。

+

+例如加载[`DDIMScheduler`]:

+

+```python

+from diffusers import DDIMScheduler, DiffusionPipeline

+

+ddim = DDIMScheduler.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", subfolder="scheduler")

+```

+

+然后将新调度器传入管道:

+

+```python

+pipeline = DiffusionPipeline.from_pretrained(

+ "stable-diffusion-v1-5/stable-diffusion-v1-5", scheduler=ddim, torch_dtype=torch.float16, use_safetensors=True

+).to("cuda")

+```

+

+## 调度器对比

+

+不同调度器各有优劣,难以定量评估哪个最适合您的流程。通常需要在去噪速度与质量之间权衡。我们建议尝试多种调度器以找到最佳方案。通过`pipeline.scheduler.compatibles`属性可查看兼容当前管道的所有调度器。

+

+下面我们使用相同提示词和随机种子,对比[`LMSDiscreteScheduler`]、[`EulerDiscreteScheduler`]、[`EulerAncestralDiscreteScheduler`]和[`DPMSolverMultistepScheduler`]的表现:

+

+```python

+import torch

+from diffusers import DiffusionPipeline

+

+pipeline = DiffusionPipeline.from_pretrained(

+ "stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True

+).to("cuda")

+

+prompt = "A photograph of an astronaut riding a horse on Mars, high resolution, high definition."

+generator = torch.Generator(device="cuda").manual_seed(8)

+```

+

+使用[`~ConfigMixin.from_config`]方法加载不同调度器的配置来切换管道调度器:

+

+ +

+ 徽章的管道表示模型可以利用 Apple silicon 设备上的 MPS 后端进行更快的推理。欢迎提交 [Pull Request](https://github.com/huggingface/diffusers/compare) 来为缺少此徽章的管道添加它。

+

+🤗 Diffusers 与 Apple silicon(M1/M2 芯片)兼容,使用 PyTorch 的 [`mps`](https://pytorch.org/docs/stable/notes/mps.html) 设备,该设备利用 Metal 框架来发挥 MacOS 设备上 GPU 的性能。您需要具备:

+

+- 配备 Apple silicon(M1/M2)硬件的 macOS 计算机

+- macOS 12.6 或更高版本(推荐 13.0 或更高)

+- arm64 版本的 Python

+- [PyTorch 2.0](https://pytorch.org/get-started/locally/)(推荐)或 1.13(支持 `mps` 的最低版本)

+

+`mps` 后端使用 PyTorch 的 `.to()` 接口将 Stable Diffusion 管道移动到您的 M1 或 M2 设备上:

+

+```python

+from diffusers import DiffusionPipeline

+

+pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5")

+pipe = pipe.to("mps")

+

+# 如果您的计算机内存小于 64 GB,推荐使用

+pipe.enable_attention_slicing()

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+image

+```

+

+

徽章的管道表示模型可以利用 Apple silicon 设备上的 MPS 后端进行更快的推理。欢迎提交 [Pull Request](https://github.com/huggingface/diffusers/compare) 来为缺少此徽章的管道添加它。

+

+🤗 Diffusers 与 Apple silicon(M1/M2 芯片)兼容,使用 PyTorch 的 [`mps`](https://pytorch.org/docs/stable/notes/mps.html) 设备,该设备利用 Metal 框架来发挥 MacOS 设备上 GPU 的性能。您需要具备:

+

+- 配备 Apple silicon(M1/M2)硬件的 macOS 计算机

+- macOS 12.6 或更高版本(推荐 13.0 或更高)

+- arm64 版本的 Python

+- [PyTorch 2.0](https://pytorch.org/get-started/locally/)(推荐)或 1.13(支持 `mps` 的最低版本)

+

+`mps` 后端使用 PyTorch 的 `.to()` 接口将 Stable Diffusion 管道移动到您的 M1 或 M2 设备上:

+

+```python

+from diffusers import DiffusionPipeline

+

+pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5")

+pipe = pipe.to("mps")

+

+# 如果您的计算机内存小于 64 GB,推荐使用

+pipe.enable_attention_slicing()

+

+prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+image

+```

+

+ +

+欢迎查看Optimum Neuron [文档](https://huggingface.co/docs/optimum-neuron/en/inference_tutorials/stable_diffusion#generate-images-with-stable-diffusion-models-on-aws-inferentia)中更多不同用例的指南和示例!

\ No newline at end of file

diff --git a/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md

new file mode 100644

index 0000000000000000000000000000000000000000..4b3804d0150027cb59bdbc8c351b4d3f4f040271

--- /dev/null

+++ b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md

@@ -0,0 +1,82 @@

+

+

+# ONNX Runtime

+

+🤗 [Optimum](https://github.com/huggingface/optimum) 提供了兼容 ONNX Runtime 的 Stable Diffusion 流水线。您需要运行以下命令安装支持 ONNX Runtime 的 🤗 Optimum:

+

+```bash

+pip install -q optimum["onnxruntime"]

+```

+

+本指南将展示如何使用 ONNX Runtime 运行 Stable Diffusion 和 Stable Diffusion XL (SDXL) 流水线。

+

+## Stable Diffusion

+

+要加载并运行推理,请使用 [`~optimum.onnxruntime.ORTStableDiffusionPipeline`]。若需加载 PyTorch 模型并实时转换为 ONNX 格式,请设置 `export=True`:

+

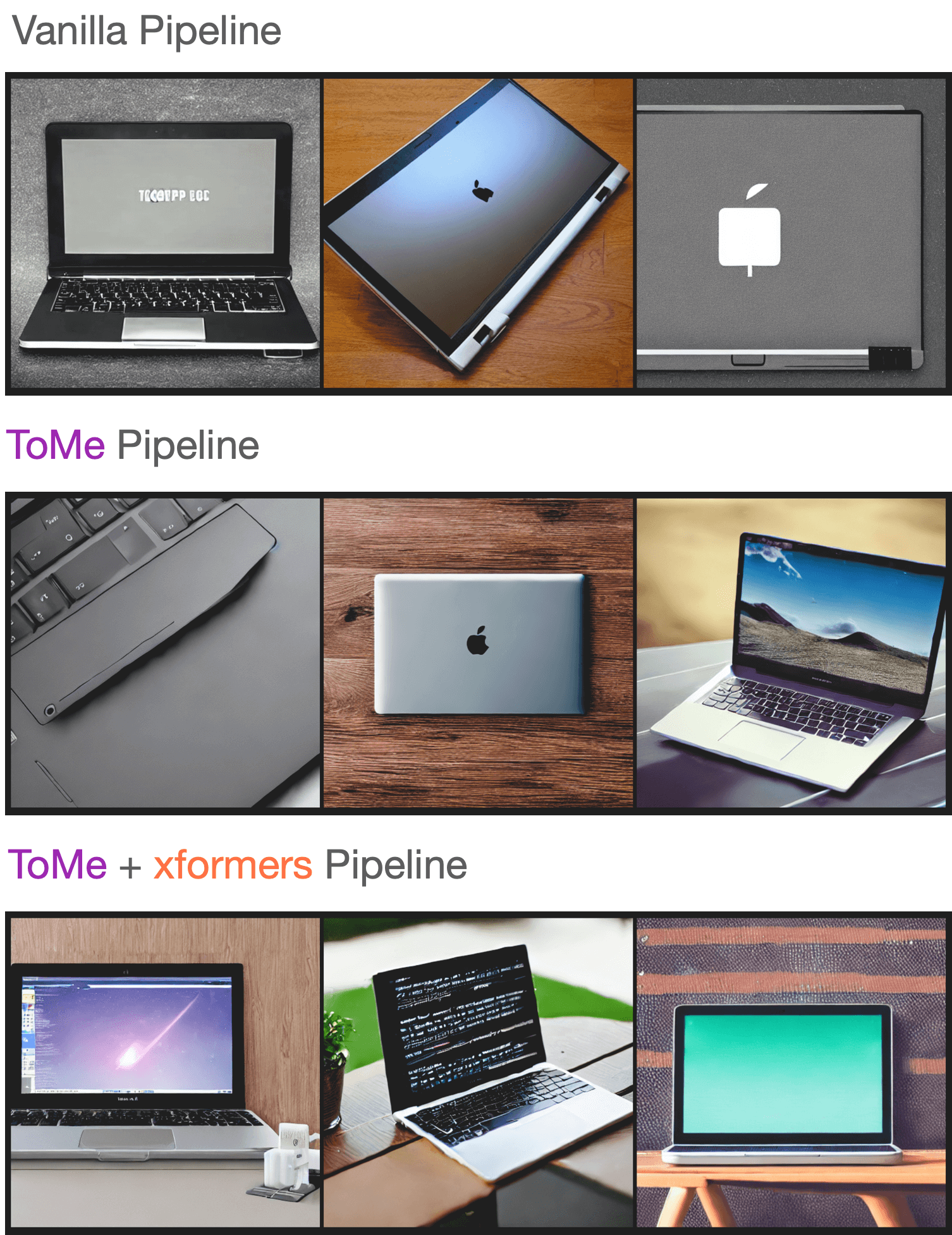

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")

+```

+

+

+

+欢迎查看Optimum Neuron [文档](https://huggingface.co/docs/optimum-neuron/en/inference_tutorials/stable_diffusion#generate-images-with-stable-diffusion-models-on-aws-inferentia)中更多不同用例的指南和示例!

\ No newline at end of file

diff --git a/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md

new file mode 100644

index 0000000000000000000000000000000000000000..4b3804d0150027cb59bdbc8c351b4d3f4f040271

--- /dev/null

+++ b/exp_code/1_benchmark/diffusers-WanS2V/docs/source/zh/optimization/onnx.md

@@ -0,0 +1,82 @@

+

+

+# ONNX Runtime

+

+🤗 [Optimum](https://github.com/huggingface/optimum) 提供了兼容 ONNX Runtime 的 Stable Diffusion 流水线。您需要运行以下命令安装支持 ONNX Runtime 的 🤗 Optimum:

+

+```bash

+pip install -q optimum["onnxruntime"]

+```

+

+本指南将展示如何使用 ONNX Runtime 运行 Stable Diffusion 和 Stable Diffusion XL (SDXL) 流水线。

+

+## Stable Diffusion

+

+要加载并运行推理,请使用 [`~optimum.onnxruntime.ORTStableDiffusionPipeline`]。若需加载 PyTorch 模型并实时转换为 ONNX 格式,请设置 `export=True`:

+

+```python

+from optimum.onnxruntime import ORTStableDiffusionPipeline

+

+model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

+pipeline = ORTStableDiffusionPipeline.from_pretrained(model_id, export=True)

+prompt = "sailing ship in storm by Leonardo da Vinci"

+image = pipeline(prompt).images[0]

+pipeline.save_pretrained("./onnx-stable-diffusion-v1-5")

+```

+

+ +

+ +

+ +

+ +

+ +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+

+

+  +

+  +

+  +

+