Update README.md

Browse files

README.md

CHANGED

|

@@ -1,3 +1,39 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: mit

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: mit

|

| 3 |

+

datasets:

|

| 4 |

+

- FreedomIntelligence/Huatuo26M-Lite

|

| 5 |

+

- CocoNutZENG/NeuroQABenchmark

|

| 6 |

+

language:

|

| 7 |

+

- zh

|

| 8 |

+

- en

|

| 9 |

+

metrics:

|

| 10 |

+

- accuracy

|

| 11 |

+

base_model:

|

| 12 |

+

- Qwen/Qwen2.5-7B-Instruct

|

| 13 |

+

tags:

|

| 14 |

+

- medical

|

| 15 |

+

---

|

| 16 |

+

## Introduction

|

| 17 |

+

Train LLM to be neuroscientist. It expected to work in Chinese and Engliah environment.

|

| 18 |

+

|

| 19 |

+

## Data

|

| 20 |

+

1. [FreedomIntelligence/Huatuo26M-Lite](https://huggingface.co/datasets/FreedomIntelligence/Huatuo26M-Lite). We select neuroscience(神经科学) label as train data.

|

| 21 |

+

2. [CocoNutZENG/NeuroQABenchmark](https://huggingface.co/datasets/CocoNutZENG/NeuroQABenchmark)

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## Train Detail

|

| 25 |

+

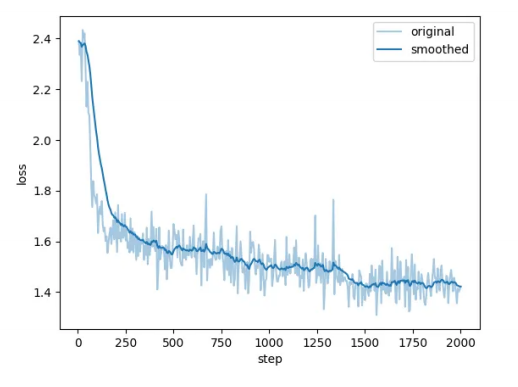

We fine-tuned the Qwen2.5 model using supervised fine-tuning (SFT) with LoRA for efficient parameter optimization. The LoRA configuration employed a rank of 8 (R=8) to

|

| 26 |

+

balance adaptation quality with computational efficiency. Training was conducted for 1 epoch (approximately 1 hour duration) using two NVIDIA A40 GPUs with DeepSpeed’s Stage 2 optimization

|

| 27 |

+

for memory efficiency. We adopted the Adam optimizer with an initial learning rate of 5e-5 and a

|

| 28 |

+

cosine learning rate scheduler for smooth decay. This configuration achieved effective model adaptation while maintaining computational tractability on our hardware setup. Our model’s loss drop as

|

| 29 |

+

expected, see figure below for loss detail.

|

| 30 |

+

[](https://postimg.cc/62FPnJzF)

|

| 31 |

+

|

| 32 |

+

## Evalution

|

| 33 |

+

| Model | Acc |

|

| 34 |

+

|----------------|-------|

|

| 35 |

+

| Qwen2.5-3b | 0.788 |

|

| 36 |

+

| Qwen2.5-7b | 0.820 |

|

| 37 |

+

| +Huatu0Lite | 0.832 |

|

| 38 |

+

| +Full data | 0.848 |

|

| 39 |

+

|